Multi-task learning is an open and challenging problem in computer vision. The typical way of conducting multi-task learning with deep neural networks is either through handcrafted schemes that share all initial layers and branch out at an ad-hoc point, or through separate task-specific networks with an additional feature sharing/fusion mechanism. Unlike existing methods, we …

AdaShare GitHub

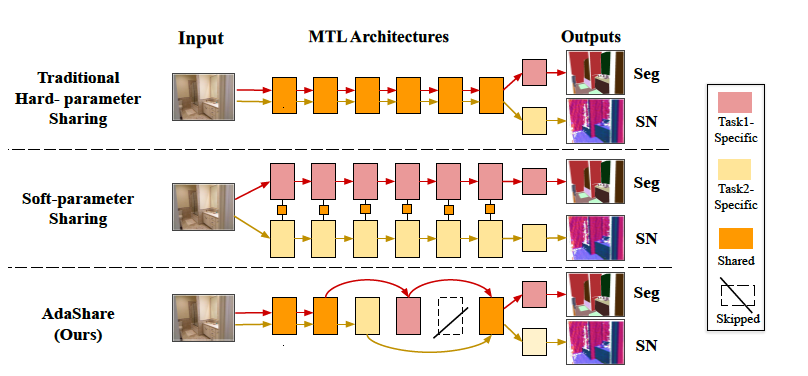

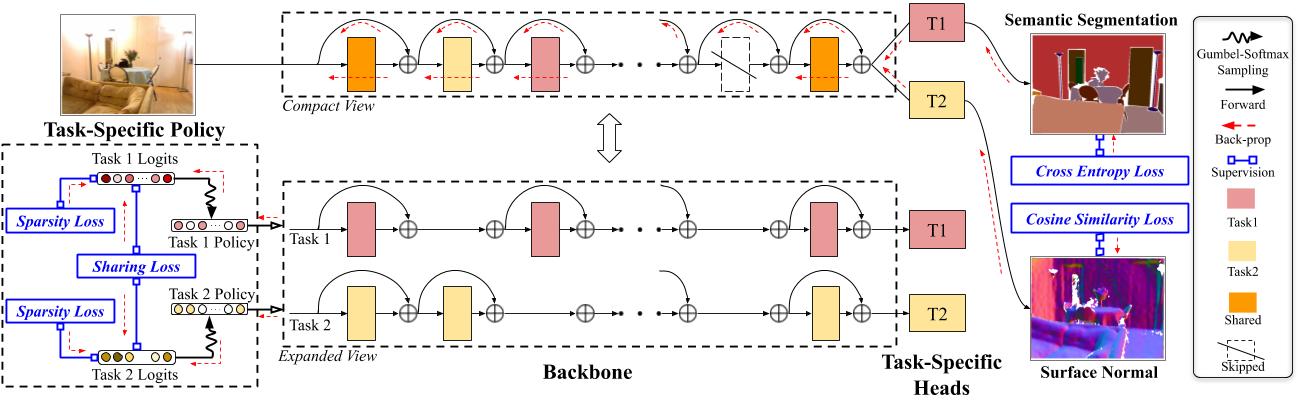

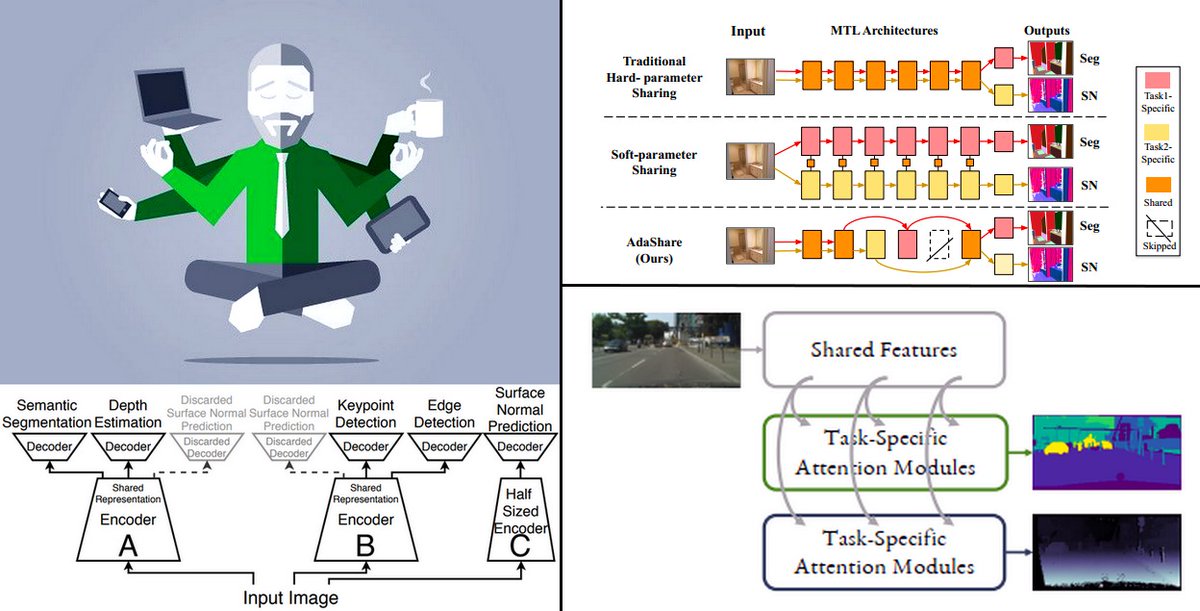

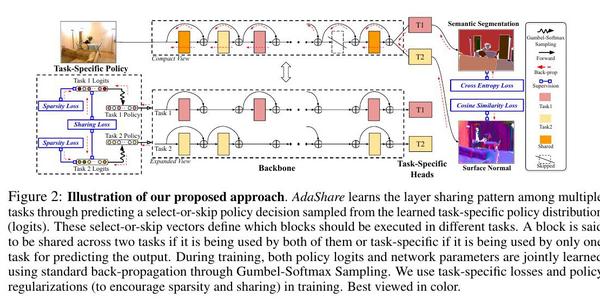

Figure 1: A conceptual overview of our approach (best viewed in color). Consider a deep multi-task learning scenario with two tasks, such as Semantic Segmentation (Seg) and Surface Normal Prediction (SN). Traditional hard-parameter sharing uses the same initial layers and splits the network into task-specific …
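To make the contrast with hard-parameter sharing concrete, here is a small parameter-count sketch. It assumes, purely for illustration, a backbone of equally sized blocks and a single hand-picked branch point; the function names `hard_sharing_params` and `separate_networks_params` are hypothetical and do not come from the AdaShare code.

```python
def hard_sharing_params(num_blocks: int, params_per_block: int,
                        num_tasks: int, branch_point: int) -> int:
    """Parameter count of a hard-parameter-sharing backbone.

    Blocks [0, branch_point) are shared by every task; each task gets its
    own copy of the remaining blocks. Assumes equally sized blocks.
    """
    if not 0 <= branch_point <= num_blocks:
        raise ValueError("branch_point must lie in [0, num_blocks]")
    shared = branch_point * params_per_block
    per_task = (num_blocks - branch_point) * params_per_block
    return shared + num_tasks * per_task


def separate_networks_params(num_blocks: int, params_per_block: int,
                             num_tasks: int) -> int:
    """Parameter count when every task trains its own full backbone."""
    return num_tasks * num_blocks * params_per_block


if __name__ == "__main__":
    # Hypothetical setting: 16 blocks of 1M parameters each, two tasks.
    blocks, per_block, tasks = 16, 1_000_000, 2
    print(hard_sharing_params(blocks, per_block, tasks, branch_point=8))  # 24000000
    print(separate_networks_params(blocks, per_block, tasks))             # 32000000
```

In this scheme the branch point is a fixed hyperparameter chosen by hand; AdaShare instead learns, per task and per block, whether a block is used.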

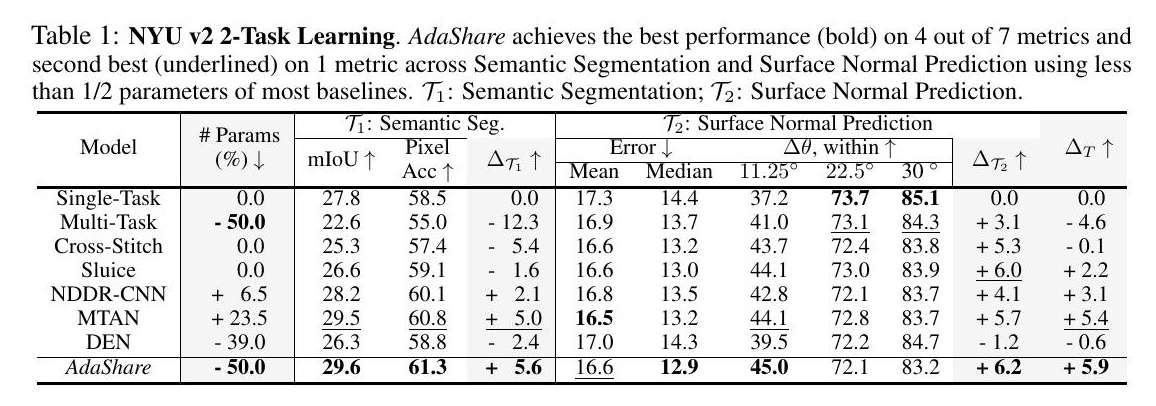

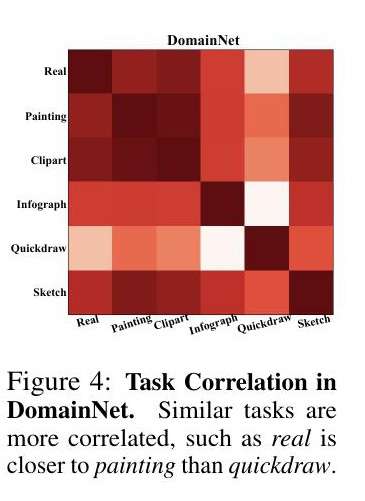

AdaShare learns the layer-sharing pattern among multiple tasks by predicting a select-or-skip policy decision sampled from the learned task-specific policy distribution. These select-or-skip vectors define which blocks are executed in each task. A block is said to be shared across two tasks if both of them use it, or task-specific if it is used by only one task.

Our proposed method AdaShare achieves the best performance (bold) on ten out of twelve metrics across Semantic Segmentation, Surface Normal Prediction and Depth Prediction, using less than 1/3 of the parameters of most of the baselines.

Table columns: Model; # Params (↓); Semantic Seg: mIoU (↑), Pixel Acc (↑); Surface Normal Prediction: Error (↓), within (↑); Depth Prediction: Error (↓), within (↑).
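A minimal sketch of the select-or-skip bookkeeping described above, in plain Python. The probabilities are hypothetical placeholders for a learned policy distribution, and independent per-block Bernoulli sampling is a simplifying assumption, not necessarily how the AdaShare code draws its decisions.

```python
import random
from typing import List, Sequence


def sample_policy(select_probs: Sequence[Sequence[float]],
                  rng: random.Random) -> List[List[bool]]:
    """Sample a select (True) / skip (False) decision for every task and block.

    select_probs[t][l] is the (assumed) probability that task t executes block l.
    """
    return [[rng.random() < p for p in task_probs] for task_probs in select_probs]


def classify_blocks(policy: Sequence[Sequence[bool]]) -> List[str]:
    """Label each block as shared, task-specific, or skipped by every task."""
    labels = []
    for l in range(len(policy[0])):
        users = [t for t, decisions in enumerate(policy) if decisions[l]]
        if len(users) >= 2:
            labels.append("shared:" + ",".join(map(str, users)))
        elif len(users) == 1:
            labels.append(f"task-specific:{users[0]}")
        else:
            labels.append("skipped")
    return labels


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical learned probabilities for 2 tasks x 4 blocks.
    probs = [[0.9, 0.8, 0.1, 0.7],
             [0.9, 0.2, 0.6, 0.1]]
    policy = sample_policy(probs, rng)
    print(policy)
    print(classify_blocks(policy))
```

With more than two tasks, a block can be shared by any subset of them, which is why the label lists the task indices that use it.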

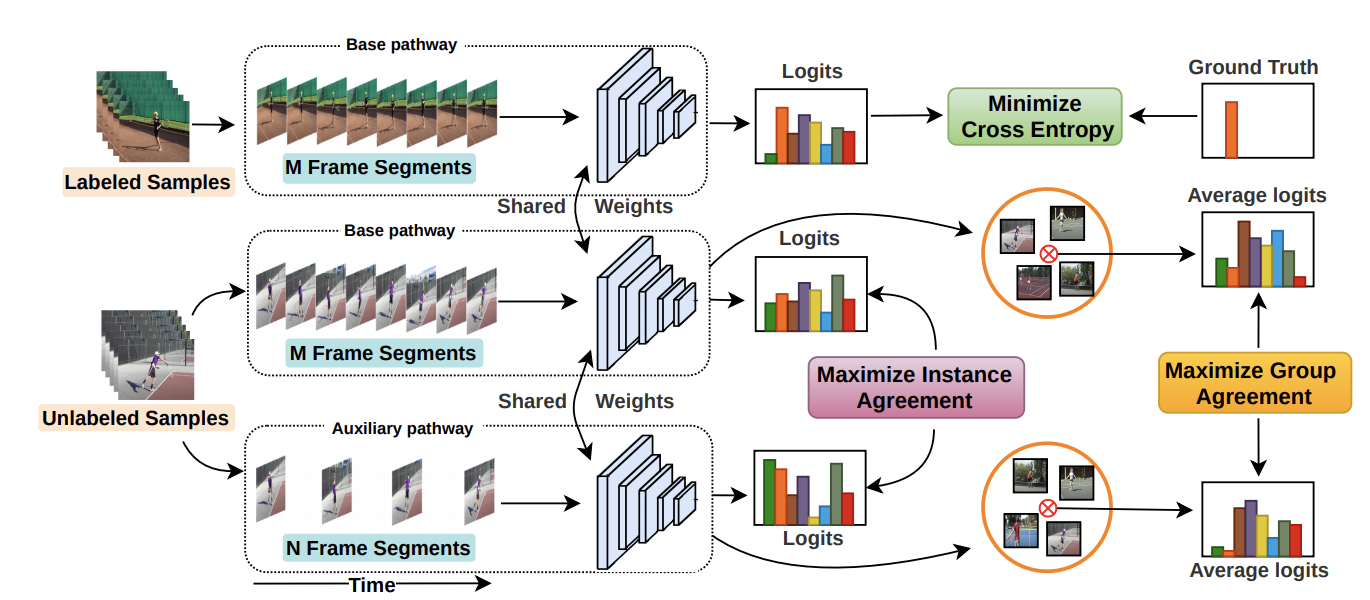

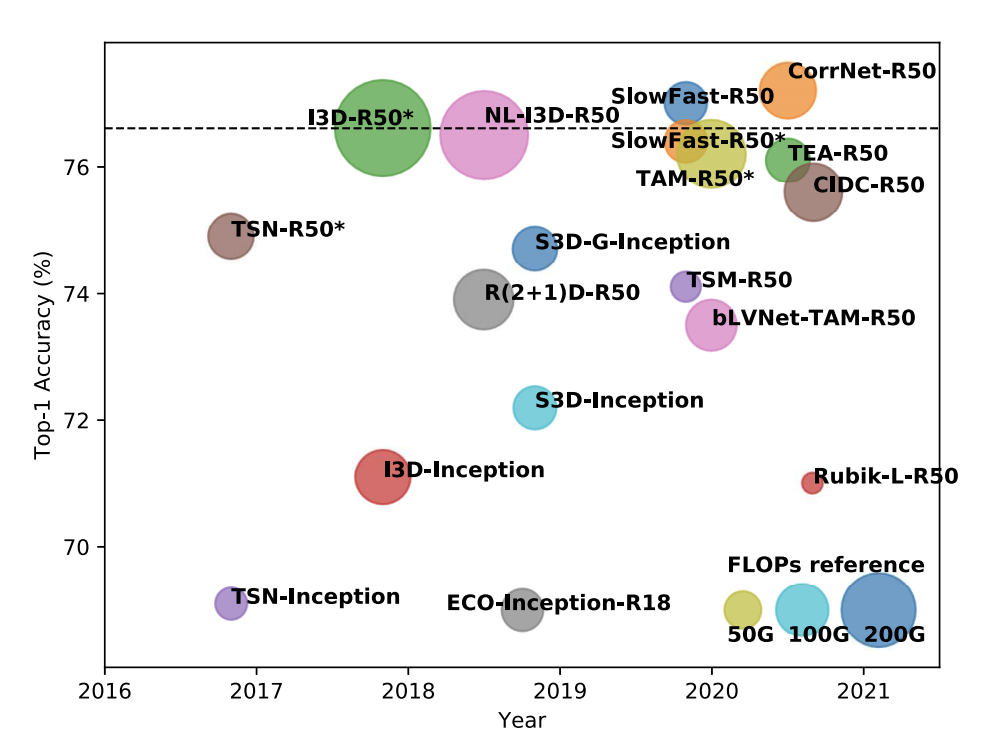

AdaShare: Learning What to Share for Efficient Deep Multi-Task Learning. Ximeng Sun, Rameswar Panda, Rogerio Feris, Kate Saenko. Advances in Neural Information Processing Systems (NeurIPS).

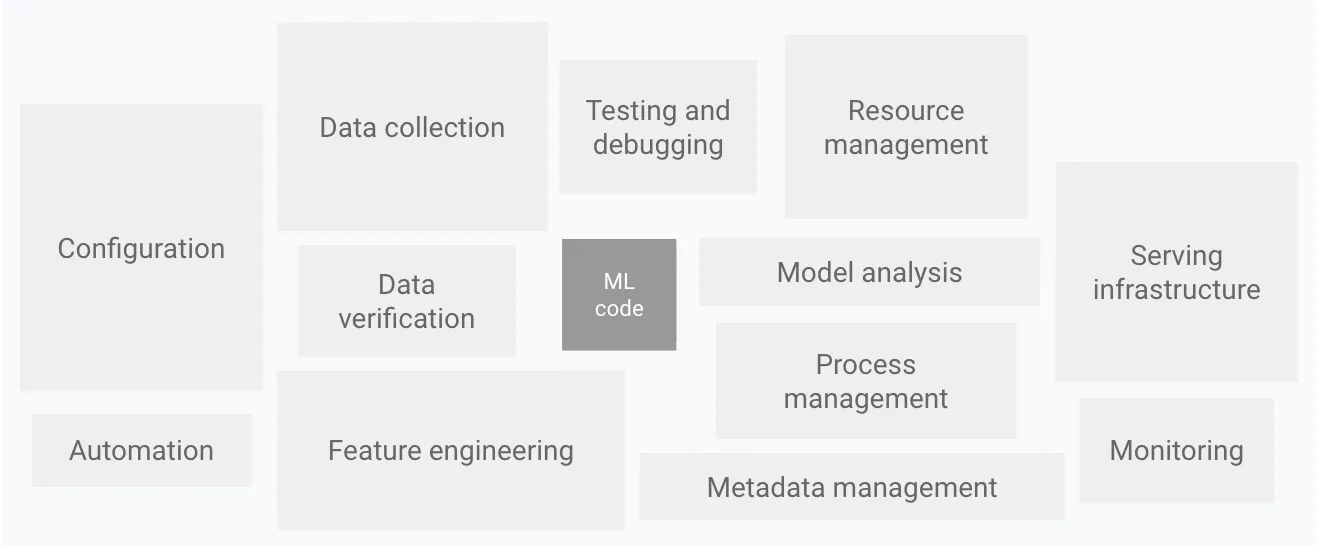

Table 8: Ablation studies on NYU v2, 2-task scenario. Our full model improves Semantic Segmentation performance without much difference on Surface Normal Prediction.

Table 1: NYU v2 2-Task. AdaShare achieves the best performance (bold) on 4 out of 7 metrics and the second best (underlined) on 1 metric, using less than 1/2 of the parameters of most baselines.

Rameswar Panda

AdaShare is a novel and differentiable approach for efficient multi-task learning that learns the feature-sharing pattern to achieve the best recognition accuracy while restricting the memory footprint as much as possible. Our main idea is to learn the sharing pattern through a task-specific policy that selectively chooses which layers to execute for a given task in the multi-task network.
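Executing only the selected layers is easiest to picture with residual blocks, where a skipped block simply passes its input through. The sketch below assumes a residual backbone and uses scalar toy blocks so it runs anywhere; in a real network each block would be a convolutional residual branch operating on tensors, and `run_task` is a hypothetical helper name.

```python
from typing import Callable, Sequence

Block = Callable[[float], float]


def run_task(x: float, blocks: Sequence[Block],
             decisions: Sequence[bool]) -> float:
    """Forward pass through residual blocks under a select/skip policy.

    A selected block computes x + f(x); a skipped block is the identity,
    so skipping never changes the shape of the running activation.
    """
    if len(blocks) != len(decisions):
        raise ValueError("need one decision per block")
    for f, execute in zip(blocks, decisions):
        if execute:
            x = x + f(x)
    return x


if __name__ == "__main__":
    # Toy scalar "residual branches" (hypothetical), shared by both tasks.
    blocks = [lambda v: 1.0, lambda v: 2.0 * v, lambda v: -0.5 * v]
    print(run_task(1.0, blocks, [True, True, False]))  # 6.0 (task A)
    print(run_task(1.0, blocks, [True, False, True]))  # 1.0 (task B)
```

Both tasks reuse the same block weights; only the per-task decision vector differs, which is what keeps the parameter count low.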

Learning What to Share for Efficient Deep Multi-Task Learning

Ximeng Sun

We propose a novel approach for adaptively determining the feature-sharing pattern across multiple tasks (what layers to share across which tasks) in deep …

Table 5: Ablation studies on CityScapes 2-Task learning. The improvement over two random experiments shows that both the number and the location of dropped blocks in each task are well learned by our model. Furthermore, the comparison with w/o curriculum, w/o sparsity and w/o sharing shows the benefits of curriculum learning, sparsity regularization and the sharing loss, respectively.
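The ablation names two regularizers besides the task losses. Their exact definitions are not given in this text, so the sketch below shows one plausible form, which is an assumption: a sparsity term that sums the log select-probabilities (minimizing it pushes probabilities toward skipping), and a sharing term that penalizes differences between tasks' select-probabilities, weighted more heavily for earlier blocks. The weights `lambda_sparsity` and `lambda_sharing` and their default values are hypothetical.

```python
import math
from itertools import combinations
from typing import Sequence


def sparsity_loss(select_probs: Sequence[Sequence[float]]) -> float:
    """Sum of log select-probabilities over all tasks and blocks.

    Minimizing this lowers select probabilities, i.e. encourages skipping.
    Probabilities must be strictly positive.
    """
    return sum(math.log(p) for task in select_probs for p in task)


def sharing_loss(select_probs: Sequence[Sequence[float]]) -> float:
    """Weighted L1 distance between every pair of tasks' policies.

    Block l (0-based) gets weight (L - l) / L, so disagreement in early
    blocks costs more, nudging tasks to share their lower layers.
    """
    num_blocks = len(select_probs[0])
    total = 0.0
    for a, b in combinations(select_probs, 2):
        for l, (pa, pb) in enumerate(zip(a, b)):
            total += (num_blocks - l) / num_blocks * abs(pa - pb)
    return total


def total_loss(task_losses: Sequence[float],
               select_probs: Sequence[Sequence[float]],
               lambda_sparsity: float = 0.05,
               lambda_sharing: float = 0.05) -> float:
    """Task losses plus the two (hypothetically weighted) regularizers."""
    return (sum(task_losses)
            + lambda_sparsity * sparsity_loss(select_probs)
            + lambda_sharing * sharing_loss(select_probs))


if __name__ == "__main__":
    probs = [[0.9, 0.6, 0.2], [0.8, 0.3, 0.7]]  # hypothetical, 2 tasks x 3 blocks
    print(total_loss([0.4, 0.7], probs))
```

Dropping either term from `total_loss` mirrors the "w/o sparsity" and "w/o sharing" rows of the ablation in spirit.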

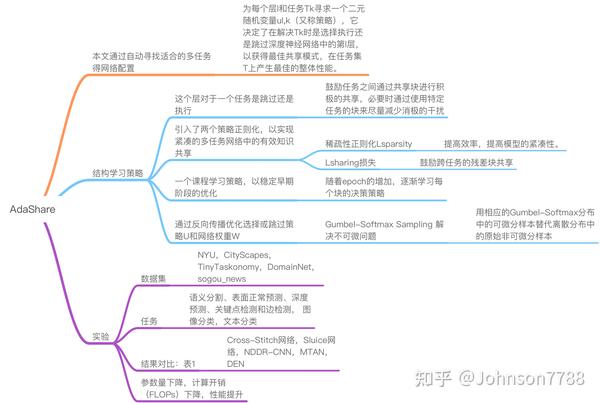

Official code from the paper authors: sunxm2357/AdaShare (GitHub).

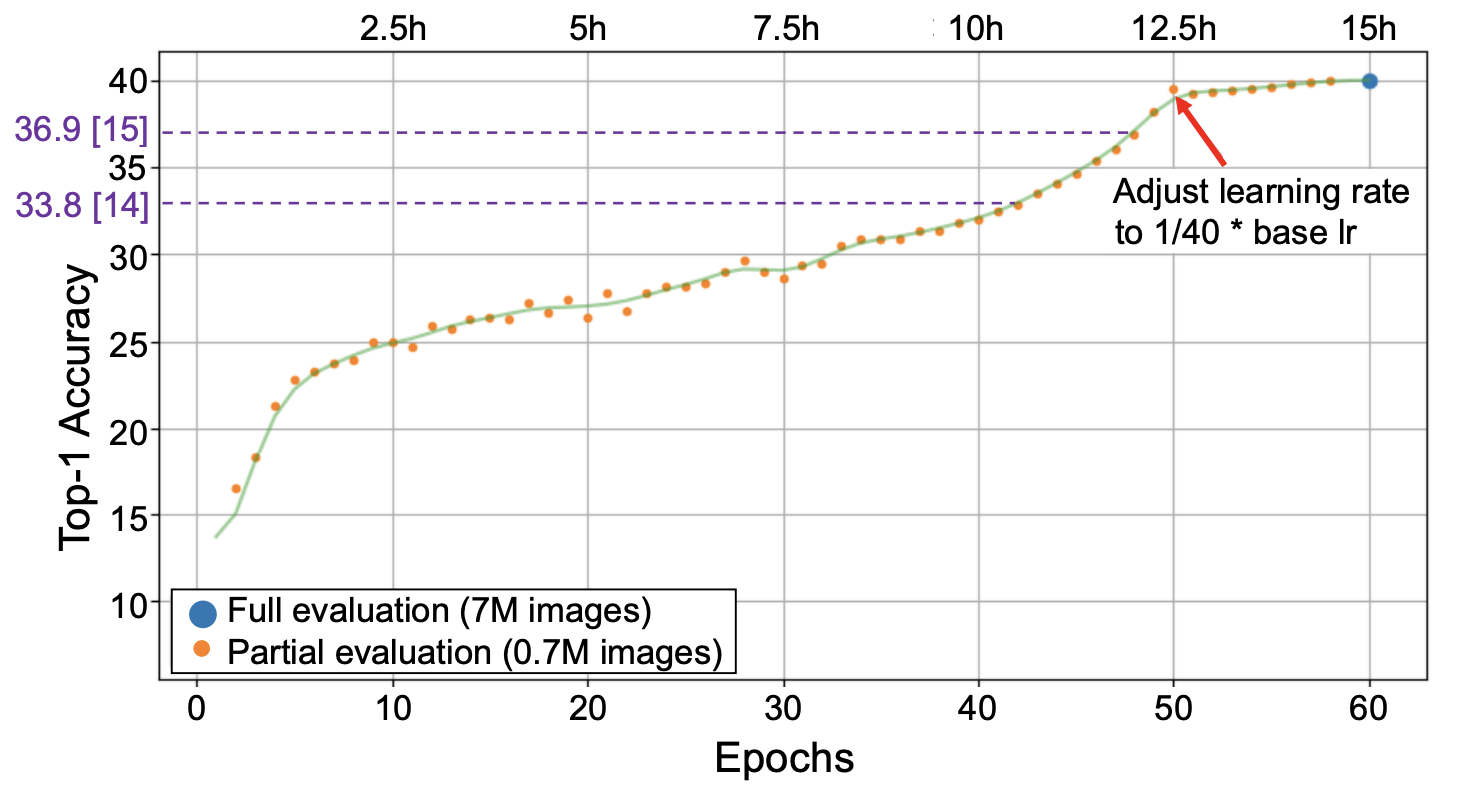

AdaShare requires much less computation (FLOPs) than existing MTL methods. For example, in Cityscapes 2-task, Cross-stitch/Sluice, NDDR, MTAN, DEN, and AdaShare use 3706G, 32G, 4431G, 3918G and 3335G FLOPs, and in NYU v2 3-task they use 5559G, 5721G, 5843G, 5771G and 5013G FLOPs, respectively. Overall, AdaShare offers on average about 767% …
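The relative savings implied by the NYU v2 3-task figures can be checked with a few lines of arithmetic. The FLOPs values are copied as they appear above; because only ratios are computed, the result does not depend on where a lost decimal point belongs, assuming every figure lost it in the same way.

```python
def relative_savings(baseline_flops: float, ours_flops: float) -> float:
    """Fraction of the baseline's FLOPs saved by the cheaper model."""
    return (baseline_flops - ours_flops) / baseline_flops


# NYU v2 3-task FLOPs as listed in the text above (same units throughout).
NYU_3TASK = {
    "Cross-stitch/Sluice": 5559,
    "NDDR": 5721,
    "MTAN": 5843,
    "DEN": 5771,
}
ADASHARE = 5013

if __name__ == "__main__":
    for name, flops in NYU_3TASK.items():
        print(f"{name}: {relative_savings(flops, ADASHARE):.1%} fewer FLOPs")
```

Run as a script, this reports savings of roughly 9.8%, 12.4%, 14.2% and 13.1% against the four baselines, in the order listed.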

Training multiple tasks jointly in one deep network yields reduced latency during inference and better performance over the single …

Figure 6: Change in Pixel Accuracy for Semantic Segmentation classes of AdaShare over MTAN (blue bars). Classes are ordered by the number of pixel labels (the black line). Compared to MTAN, we improve the performance of most classes, including those with less labeled data.

Table 6: Ablation studies on NYU v2 3-Task learning. The improvement over two random experiments shows that both the number and the location of dropped blocks in each task are well learned by our model. Furthermore, the comparison with w/o curriculum, w/o sparsity and w/o sharing shows the benefits of curriculum learning, sparsity regularization and the sharing loss, respectively.

… sharing approach, called AdaShare, that decides what to share across which tasks to achieve the best recognition accuracy, while taking resource efficiency into account. Specifically, our main idea is to learn the sharing pattern through a task-specific policy that selectively chooses which layers to execute for a given task in the …
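Sampling a discrete select/skip decision is not differentiable by itself. A standard workaround, assumed here rather than taken from this text, is the Gumbel-Softmax relaxation: add Gumbel noise to the two logits and apply a temperature-scaled softmax. The sketch handles one block for one task; the logits, the temperature, and the helper names are hypothetical.

```python
import math
import random


def gumbel_noise(rng: random.Random) -> float:
    """Sample from the standard Gumbel distribution via -log(-log(U))."""
    u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)  # keep log() finite
    return -math.log(-math.log(u))


def relaxed_select(logit_select: float, logit_skip: float,
                   temperature: float, rng: random.Random) -> float:
    """Gumbel-Softmax weight in [0, 1] for the 'select' option.

    Lower temperatures push the weight toward 0 or 1; for a fixed noise
    sample the weight is a smooth function of the logits.
    """
    a = (logit_select + gumbel_noise(rng)) / temperature
    b = (logit_skip + gumbel_noise(rng)) / temperature
    m = max(a, b)  # subtract the max for numerical stability
    ea, eb = math.exp(a - m), math.exp(b - m)
    return ea / (ea + eb)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical policy logits for one task and one block.
    weights = [relaxed_select(2.0, -2.0, temperature=0.5, rng=rng)
               for _ in range(1000)]
    hard = sum(w > 0.5 for w in weights) / len(weights)
    print(f"fraction of 'select' decisions: {hard:.3f}")  # close to sigmoid(4), about 0.982
```

Thresholding the weight at 0.5 recovers an exact sample from the softmax over the two logits (the Gumbel-max property). In PyTorch, `torch.nn.functional.gumbel_softmax(logits, tau=..., hard=True)` provides the same relaxation over a logits tensor, returning one-hot samples whose gradients flow through the soft weights.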

Table 2: CityScapes 2-Task. AdaShare achieves the best performance (bold) on 5 out of 7 metrics and the second best (underlined) on 1 metric, using less than 1/2 of the parameters of most baselines.

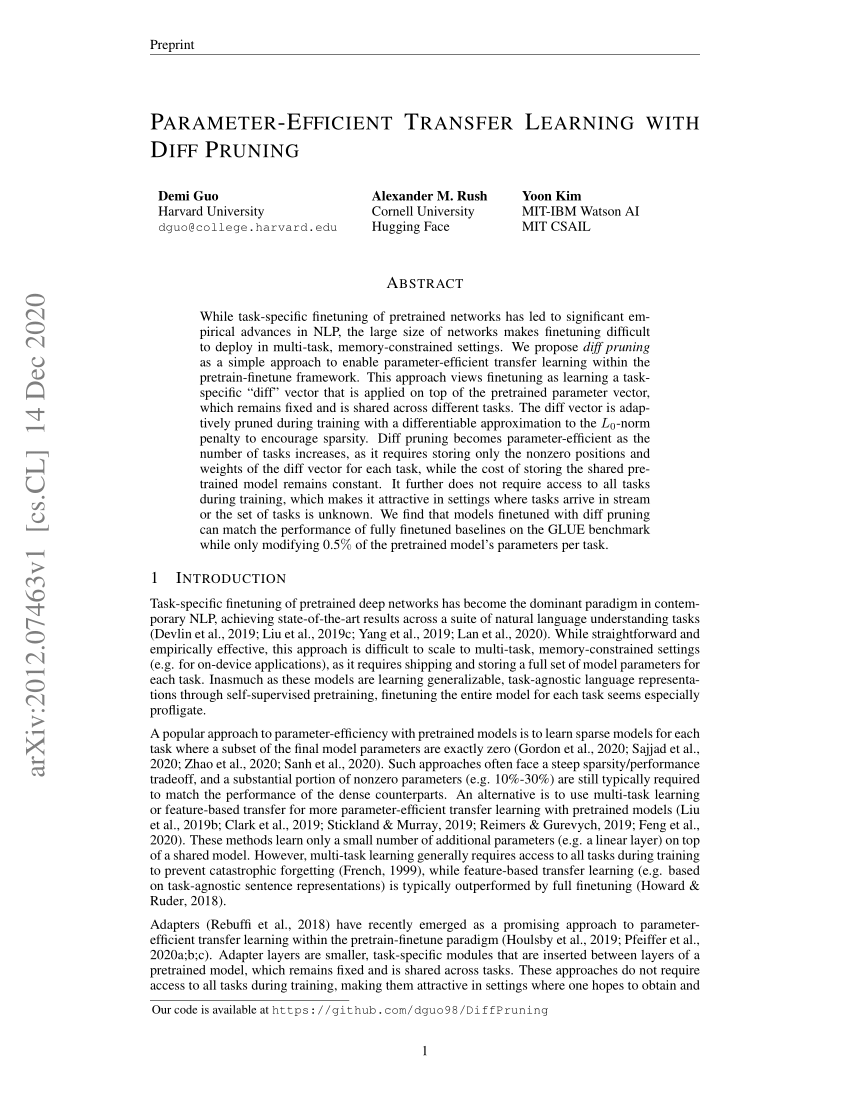

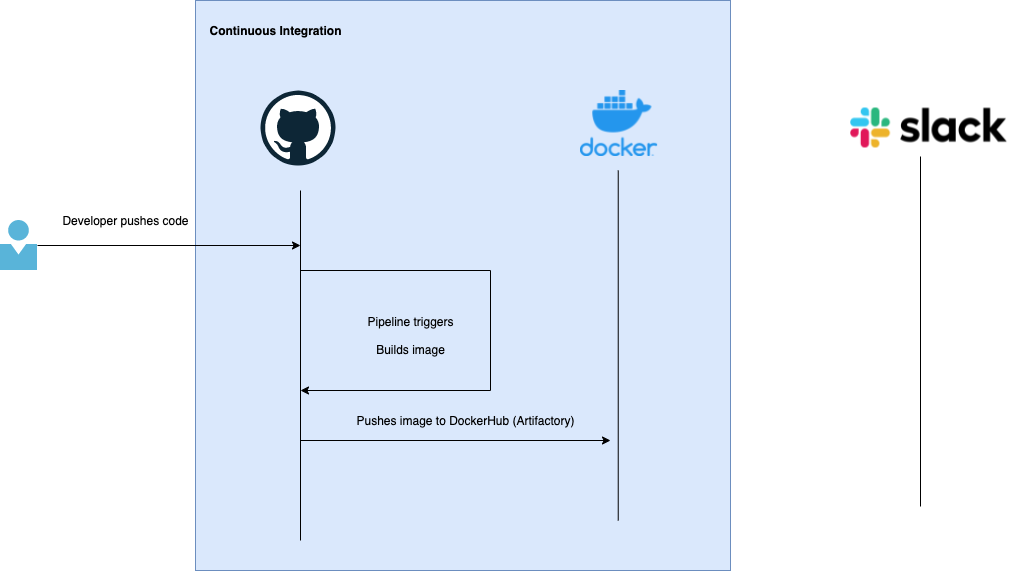
